Abhijit Banerjee, Esther Duflo and Michael Kremer were awarded the 2019 Nobel Prize in Economics for pioneering research into the use of experimental approaches to fight global poverty (Biswas, 2019). They used randomised control trials (RCTs) to test the effectiveness of programs to alleviate poverty. Today, RCTs are among the most popular methods for measuring programmatic impact and are often described as the ‘gold standard’.

So, what is a randomised control trial?

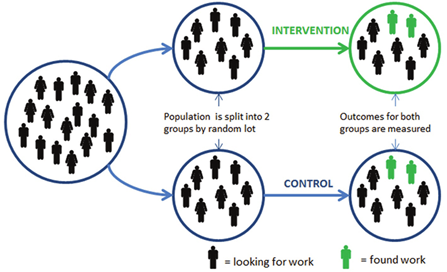

Under this method, program beneficiaries and non-beneficiaries are selected exclusively through random assignment within a population prior to the intervention. Participants who receive the intervention form the treatment group, while those who do not receive the benefits of the program form the ‘control group’. Changes in each group's indicators are tracked over time and compared to measure impact.
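The random assignment described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed procedure: the function name `assign_groups`, the participant labels, the fixed seed and the equal split are all assumptions made for the example.

```python
import random


def assign_groups(participants, seed=0):
    """Randomly split participants into a treatment and a control group.

    Hypothetical helper: the equal split and the fixed seed are
    illustrative assumptions, not part of any specific RCT protocol.
    """
    rng = random.Random(seed)      # seeded so the assignment is reproducible
    shuffled = list(participants)  # copy, so the input list is not modified
    rng.shuffle(shuffled)          # random order removes any systematic pattern
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Hypothetical population of ten participants
participants = [f"person_{i}" for i in range(10)]
treatment, control = assign_groups(participants)
```

Because group membership depends only on the shuffle, no characteristic of a participant can influence whether they end up in the treatment or the control group.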

Let us understand the concept with the help of the following example:

Source: (Torgerson, 2012)

Job seekers are randomly assigned to treatment and comparison groups for a program providing employment support. After the intervention, only two people among those who received its benefits were employed. Similarly, only two people in the group that did not receive the benefits found work. The group that received the intervention therefore fared no better than the group that did not. In this example, one can conclude that the intervention was ineffective and did not increase the rate of employment among job seekers.
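The comparison in this example amounts to a difference in employment rates between the two groups. The sketch below works through that arithmetic. The text states only that two people in each group found work, so the group size of five is an assumption made purely for illustration, as are the variable and function names.

```python
def employment_rate(outcomes):
    """Share of a group that found work (1 = employed, 0 = not employed)."""
    return sum(outcomes) / len(outcomes)


# ASSUMPTION: five job seekers per group. The example only says that
# two people in each group were employed after the intervention.
treatment_outcomes = [1, 1, 0, 0, 0]
control_outcomes = [1, 1, 0, 0, 0]

# Estimated impact = treatment employment rate minus control employment rate
estimated_impact = employment_rate(treatment_outcomes) - employment_rate(control_outcomes)
```

With identical employment rates in both groups, `estimated_impact` is zero, which is the basis for concluding that the program did not raise employment in this example.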

What makes them so popular?

The random selection of participants and non-participants helps eliminate selection bias, that is, attributes that may make the treatment group systematically different from the control group and thus interfere with the outcomes of the experiment. This helps estimate the causal impact of the intervention in an unbiased manner (Gibson Michael & Sautmann Anja, n.d.).

Source: (Simister, 2017)
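The selection-bias argument can be illustrated with a small simulation. The sketch below is hypothetical throughout: it assumes an unobserved trait called `motivation` that drives outcomes, a program with no true effect, and a self-selection rule under which only the more motivated half enrols. None of these details come from the cited sources.

```python
import random


def naive_estimate(treated_flags, outcomes):
    """Difference in mean outcome between treated and untreated units."""
    treated = [y for t, y in zip(treated_flags, outcomes) if t]
    control = [y for t, y in zip(treated_flags, outcomes) if not t]
    return sum(treated) / len(treated) - sum(control) / len(control)


rng = random.Random(42)  # fixed seed for reproducibility
n = 10_000

# ASSUMPTION: outcomes depend only on motivation; the program's true effect is zero.
motivation = [rng.random() for _ in range(n)]
outcomes = list(motivation)

# Self-selection: only the more motivated people choose to join the program.
self_selected = [m > 0.5 for m in motivation]

# Randomisation: a coin flip decides who joins, regardless of motivation.
randomised = [rng.random() < 0.5 for _ in range(n)]

biased_estimate = naive_estimate(self_selected, outcomes)
randomised_estimate = naive_estimate(randomised, outcomes)
```

Under self-selection the estimate is far from zero even though the program does nothing, because the groups differ in motivation. Under random assignment the estimate stays close to the true effect of zero.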

The process of artificially inducing exogenous variation (external influences that may affect outcome indicators) through randomisation helps eliminate selection bias. This is why RCTs are understood as an experimental method within evaluation (Simister, 2017) and are considered an optimal approach to estimating the impact of a project or intervention (Singh Kultar et al., 2017).

Conducting Randomised Control Trials to evaluate the impact of a program or intervention involves the following steps:

List of recommended resources:

For a broad overview

JPAL’s website provides a comprehensive overview of Randomised Control Trials. It discusses the various elements of an RCT and takes the reader through the entire project cycle: project planning, research design, data collection, data processing and data analysis. It also provides teaching resources such as webinars by eminent faculty.

Published by UNICEF, this document gives a brief introduction to RCTs and guides practitioners on the contexts in which they can be used. It also takes the reader through the various steps involved in RCTs, and lists best practices along with challenges in implementation.

This document gives an overview of RCTs and explains the various concepts involved in conducting them. It also sheds light on their strengths and weaknesses, and on recent debates regarding the use of this method in evaluations.

Written by Carlos Barahona, this paper guides practitioners on the use of RCTs by giving an overview of the origin of the method, its rationale, and the debates around it. It also explains the application of this method, and the opportunities and challenges it presents in the impact evaluation of developmental initiatives.

Developed by UNICEF Innocenti, this video summarises key features of Randomised Control Trials using simple infographics.

Published by Better Evaluation and presented by Howard White from 3ie, this webinar gives a broad overview of RCTs. It explains the concept of randomisation using a case study, and the various designs including Pipeline, Raised Threshold, Matched Pairs, AB Comparisons, Multiple Treatment Arms and Encouragement design, which practitioners can use.

For in-depth understanding

Published by Oxford, this book offers a critical perspective on Randomised Control Trials. It discusses the issues, challenges and limitations associated with RCTs.

Case Study

In this case study, JPAL measures the impact of microfinance in 6,800 households in 104 slum communities in Hyderabad. It discusses details of the intervention, context and results of the evaluation. The webpage links to the database and research paper for further details.

In this case study, JPAL measures the impact of Maternal Literacy and Participation Programs for Child Learning in around 9,000 households in 480 villages. It discusses details of the intervention, context and results of the evaluation. The webpage links to the database and research paper for further details.

JPAL’s entire searchable database of evaluation resources and policy publications can be viewed through this website. You can access JPAL’s repository of datasets from RCTs by clicking on the link here.

Toolkits

This paper, written by Esther Duflo, Rachel Glennerster and Michael Kremer, provides a toolkit for researchers, students and practitioners wishing to introduce randomisation as part of a research design in the field. It discusses in detail the idea of randomisation, sample size, practical designs and implementation issues, and analysis and inference issues in RCTs.

Further Reading

Published by J-PAL, this resource is intended to help practitioners who have a general understanding of randomized evaluations and want to overcome challenges in conducting RCTs, such as small sample sizes and strict eligibility criteria.

Written by Martin Ravallion, this paper takes stock of continuing debates about the merits of RCTs. It questions the unconditional preference for this method using three arguments: the ethical objections to RCTs, the distortion of the evidence base used to inform policy making, and the fact that the preference given to the method is unclear on a priori grounds.

Written by David Roodman, this article discusses the rapid rise of RCTs and highlights the impediments and disadvantages of using this method.